Abstract

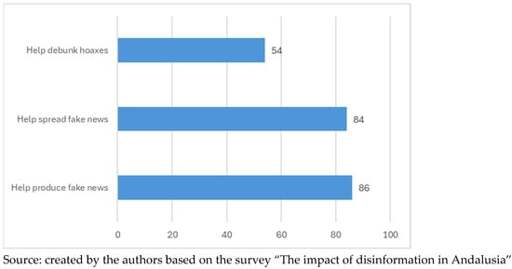

This study addresses public perception of the relationship between artificial intelligence (AI) and disinformation. It first considers the level of general awareness of AI and, on that basis, analyzes whether the public believes AI favors the creation and distribution of false content or, conversely, perceives its potential to counteract information disorders. A survey was conducted on a representative sample of the Andalusian population aged 15 and over (1550 people). The results show that over 90% of the population have heard of AI, although it is less well known among the oldest age group (78%). There is a consensus that AI helps to produce (86%) and distribute (84%) fake news. Descriptive analyses show no major differences by sex, age, social class, ideology, type of activity or size of municipality, although the less educated tend to mention these negative effects to a lesser extent. However, 54% of the population consider that AI may help in combating hoaxes, with women, the lower class and the left wing holding positive views. Logistic regressions broadly confirm these results, showing that education, ideology and social class are the most relevant factors when explaining opinions about the role of AI in disinformation.

I personally don’t think so. I also read these regular news articles claiming OpenAI has clandestinely achieved AGI or that their models have developed sentience, and that they’re just keeping it from us. That kind of story certainly helps increase the value of their company. But I think it’s a conspiracy theory. Every time I try ChatGPT or Claude or whatever, I see how it’s not that intelligent. It certainly knows a lot of facts, and it’s very impressive. But it also often fails at helping me with more complicated emails or coding tasks, or at just summarizing text correctly. I don’t see how it is on the brink of AGI, if that’s the public variant. And sure, they’re probably not telling the whole truth. They have lots of bright scientists working for them, and they like some stuff to stay behind closed doors. Most likely how they violate copyright… But I don’t think their internal models are that far ahead of what we get to see. They could certainly make a lot of money by increasing the usefulness of their product, and it seems to me like it’s stagnating. The reasoning ability is huge progress, but it still doesn’t solve a lot of issues. And I’m pretty sure we’d have ChatGPT 5 by now if it were super easy to scale and make it more intelligent.

Plus, it’s been 2 weeks since a smaller (Chinese) startup proved that other entities can compete with the market leader, and do it way more efficiently.

So I think there is lots of circumstantial evidence leading me to believe they aren’t far ahead of what other people are doing. We also have academic researchers and workgroups working on this and publishing their results publicly, so I think we have a rough idea of what issues they’re facing and what AI progress is struggling with. A lot of those issues are really hard to solve. I think it’s going to take some time until we arrive at AGI, and I think it requires a fundamentally different approach than the current model design.