Need to let loose a primal scream without collecting footnotes first? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be)

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(Credit and/or blame to David Gerard for starting this.)

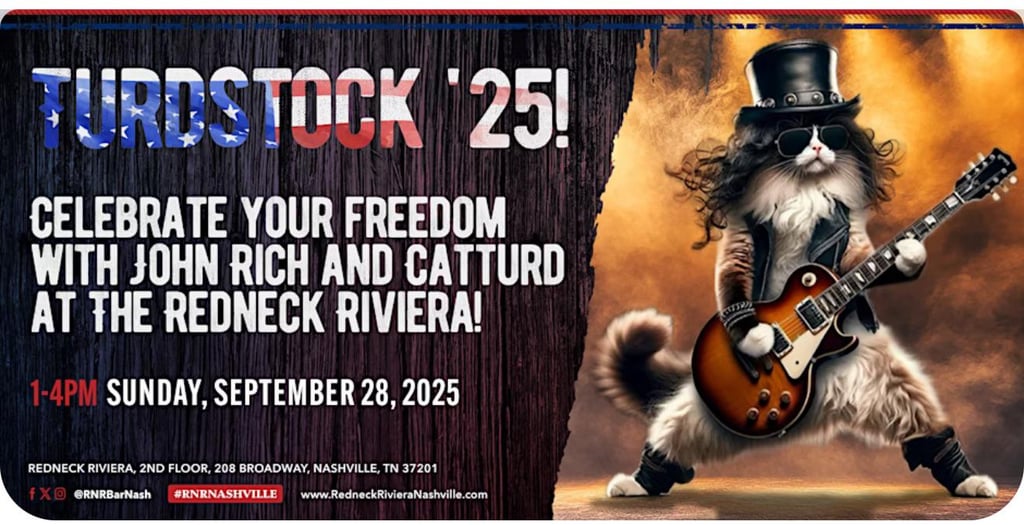

Democratizing graphic design, Nashville style

Description: genAI artifact depicting guitarist Slash as a cat, advertising a public appearance by a twitter poster. The event is titled “TURDFEST 2025”. Also, the cat doesn’t appear to be polydactyl, which seems like a missed opportunity tbh.

Also, the cat doesn’t appear to be polydactyl

truth in advertising: they’re hinting what the music will sound like

Fucking monodactyl ass cat

Turdstock? Wow, the name immediately says this is a festival worth attending! The picture only strengthens the feeling.

Intentionally being on Broadway at 1-4 PM on a Sunday is a whole vibe, and that’s before considering whatever the fuck this is.

The whole thing screams old people desperately trying to be edge and cool but missing all the signifiers. A 'bad’word but baby talk style (like a young child saying poop), reference to slash which was already dated when I was young, the time of day so people can arrive home early and still make dinner (and not late at night like the cool music thing). The headliner is a twitter microceleb and not an actual cool band. But hey, at least kid rock isnt attending, so it escapes the 100% poser feeling.

What in the world is a catturd2 public appearance like, anyway? This sad, drunk old loser shouting random slurs at the crowd?

I can answer that, as turdstock 2024 is on yt, it seems this john rich guy talking about how much he likes catturd, begging for donations (while saying fema is bad), some people spending a lot of time saying nothing (I’m skimming past and there is so much nothing being said), and some country songs (which is not my genre, did notice that for one guy the mix was off, making the music/singing bad to hear, so well done tech team there (they fix it later but still)). And then I saw the audience (they also said millions watched catturd 2023, which considering this vid has 9k views, and there are like 100 people in the audience I doubt (checked turdstock 2023, which seems to only be on rumble 223k views. So lol, double lol, saw the same fucking artists (and the for the same guy the technical issues, poor guy) (and wow rumble sucks compared to yt)). There actually also don’t seem to be that many songs, think half the time was people talking. Also, No Catturd appearance from what I can tell (E: he is there, he just doesn’t do anything it seems, he prob speaks a few minutes I guess). Also really weird bit about how somebody send a mean facebook post and facebook went ‘are you sure this is mean’ and they went all ‘zuck was never punched in the face on the playground … the founding fathers … there was a patriot girl … [5 minutes of talking] … when those redcoats moved on us … etc (this bit took 20 minutes between songs)’. There is also a guy praying for various things on stage, angry raised catholic noises

But yes, can’t judge it on the music, as an event it seemed boring as fuck, too much talking, not enough music, boring audience (none of who were holding drinks), weird political message. Rather watch Kreator for 4 hours, which considering some of the speech clips I hear, seems to be the message they were going for. Somehow the geriatrics in the audience are the most dangerous people because they only want to be left alone (??). No lightshow, no stage presence, no skulls, no fireworks (this is both a comment on the lack of fireworks, and a general comment on what I saw).

Anyway, seems my initial feeling of massive uncool stands.

Also seem they reused the slash cat AI image for 2025.Nope different cat, just same AI slop shit.E: “Ticket Price: $199 plus taxes and fees.” WHAT Wacken metalfest costs 333,00 € and that is for a whole weekend (and actually has a real Slash).

lol holy shit

Thank you for your service!

What is esp interesting is how they make this event sound bigger than it is. The venue is small, but that makes the ‘it was sold out’ show up sooner (also less hard to find enough people who would chuck down 200 bucks for this shit), but then they make claims about the livestream numbers. (which are going to be hard to check) etc. Weird sort of radicalization way to make people think there are more people in their movement than there are. Bit like going ‘catturd2 has 4m followers!’ like those numbers mean much online, esp on zombie twitter (see also John Rich has 150k subs on yt, but averages to 10k plays per non-music video at a quick glance (his music songs do very well however at millions per views (the one song I listened to was also about how Jesus was coming back soon to punish all the evildoers))), so people like the music+message in the music, but not when the guy actually opens his mouth for other stuff, all very vibes stuff. But also just how few actual music is being played vs people making nonsense talks about America/whatever. (and also listening to his live performance, I don’t think John Rich is a good musician (at least not live, the yt clips of him are pretty good, so either he was having a bad day, or autotune stuff).

And it wasn’t that hard, not like I spend that much time on it, it is quite interesting in a way, to look at these kinds of movements and try to see past their propganda and notice who they actually reach and what they do and say. And considering the people they attracted it feels very much a last attempt from a dying generation whos sun has set. (but that could also just be because younger people aren’t as easily scammed into giving away 200 bucks for this).

I had to attend a presentation from one of these guys, trying to tell a room full of journalists that LLMs could replace us & we needed to adapt by using it and I couldn’t stop thinking that an LLM could never be a trans journalist, but it could probably replace the guy giving the presentation.

I’m kind of half looking forward to every soda being sweetened with aspartame or acesulfame potassium, so I can finally quit drinking them. Perhaps blue food might indirectly help people like me eat healthier for a while. Thanks, torment nexus.

Sanders why https://gizmodo.com/bernie-sanders-reveals-the-ai-doomsday-scenario-that-worries-top-experts-2000628611

Sen. Sanders: I have talked to CEOs. Funny that you mention it. I won’t mention his name, but I’ve just gotten off the phone with one of the leading experts in the world on artificial intelligence, two hours ago.

. . .

Second point: This is not science fiction. There are very, very knowledgeable people—and I just talked to one today—who worry very much that human beings will not be able to control the technology, and that artificial intelligence will in fact dominate our society. We will not be able to control it. It may be able to control us. That’s kind of the doomsday scenario—and there is some concern about that among very knowledgeable people in the industry.

taking a wild guess it’s Yudkowsky. “very knowledgeable people” and “many/most experts” is staying on my AI apocalypse bingo sheet.

even among people critical of AI (who don’t otherwise talk about it that much), the AI apocalypse angle seems really common and it’s frustrating to see it normalized everywhere. though I think I’m more nitpicking than anything because it’s not usually their most important issue, and maybe it’s useful as a wedge issue just to bring attention to other criticisms about AI? I’m not really familiar with Bernie Sanders’ takes on AI or how other politicians talk about this. I don’t know if that makes sense, I’m very tired

Not surprised. Making Hype and Criti-hype the two poles of the public debate has been effective in corralling people who get that there is something wrong with the “AI” into Criti-hype. And politicians needs to be generalists so the trap is easy to spring.

Still, always a pity when people who should know better fall into it.

404media posted an article absolutely dunking on the idea of pivoting to AI, as one does:

media executives still see AI as a business opportunity and a shiny object that they can tell investors and their staffs that they are very bullish on. They have to say this, I guess, because everything else they have tried hasn’t worked

We—yes, even you—are using some version of AI, or some tools that have LLMs or machine learning in them in some way shape or form already

Fucking ghastly equivocation. Not just between “LLMs” and “machine learning”, but between opening a website that has a chatbot icon I never click and actually wasting my time asking questions to the slop machine.

It feels like gang initiation for insufferable dorks

It’s distressingly pervasive: autocorrect, speech recognition (not just in voice assistants, in accessibility tools), image correction in mobile cameras, so many things that are on by default and “helpful”

Apparently, for some corporate customers, Outlook has automatically turned on AI summaries as a sidebar in the preview pane for inbox messages. No, nobody I’ve talked to finds this at all helpful.

A thing I recently noticed: instead of showing the messages themselves, the MS Teams application on my work phone shows obviously AI generated summaries of messages in the notification tray. And by “summaries” I mean third person paraphrasings that are longer than the original messages and get truncated anyway.

“Worse than useless” would be an understatement.

This is pure speculation, but I suspect machine learning as a field is going to tank in funding and get its name dragged through the mud by the popping of the bubble, chiefly due to its (current) near-inability to separate itself from AI as a concept.

Remember last week when that study on AI’s impact on development speed dropped?

A lot of peeps take away on this little graphic was “see, impacts of AI development are a net negative!” I think the real take away is that METR, the AI safety group running the study, is a motley collection of deeply unserious clowns pretending to do science and their experimental set up is garbage.

https://substack.com/home/post/p-168077291

(I am once again shilling Ben Recht’s substack. )

While I also fully expect the conclusion to check out, it’s also worth acknowledging that the actual goal for these systems isn’t to supplement skilled developers who can operate effectively without them, it’s to replace those developers either with the LLM tools themselves or with cheaper and worse developers who rely on the LLM tools more.

True. They building city sized data centers and offering people 9 figure salaries for no reason. They are trying to front load the cost of paying for labour for the rest of time.

When you look at METR’s web site and review the credentials of its staff, you find that almost none of them has any sort of academic research background. No doctorates as far as I can tell, and lots of rationalist junk affiliations.

oh yeah that was obvious when you see who they are and what they do. also, one of the large opensource projects was the lesswrong site lololol

i’m surprised it’s as well constructed a study as it is even given that

found on reddit. posted without further comment

Shot-in-the-dark prediction here - the Xbox graphics team probably won’t be filling those positions any time soon.

As a sidenote, part of me expects more such cases to crop up in the following months, simply because the widespread layoffs and enshittification of the entire tech industry is gonna wipe out everyone who cares about quality.

Better Offline was rough this morning in some places. Props to Ed for keeping his cool with the guests.

Oof, that Hollywood guest (Brian Koppelman) is a dunderhead. “These AI layoffs actually make sense because of complexity theory”. “You gotta take Eliezer Yudkowsky seriously. He predicted everything perfectly.”

I looked up his background, and it turns out he’s the guy behind the TV show “Billions”. That immediately made him make sense to me. The show attempts to lionize billionaires and is ultimately undermined not just by its offensive premise but by the world’s most block-headed and cringe-inducing dialog.

Terrible choice of guest, Ed.

Yeah, that guy was a real piece of work, and if I had actually bothered to watch The Bear before, I would stop doing so in favor of sending ChatGPT a video of me yelling in my kitchen and ask it if what is depicted was the plot of the latest episode.

I study complexity theory and I’d like to know what circuit lower bound assumption he uses to prove that the AI layoffs make sense. Seriously, it is sad that the people in the VC techbro sphere are thought to have technical competence. At the same time, they do their best to erode scientific institutions.

My hot take has always been that current Boolean-SAT/MIP solvers are probably pretty close to theoretical optimality for problems that are interesting to humans & AI no matter how “intelligent” will struggle to meaningfully improve them. Ofc I doubt that Mr. Hollywood (or Yud for that matter) has actually spent enough time with classical optimization lore to understand this. Computer go FOOM ofc.

Only way I can make the link between complexity theory and laying off people is thinking about putting people in ‘can solve up to this level of problem’ style complexity classes (which regulars here should realize gets iffy fast). So hope he explained it more than that.

The only complexity theory I know of is the one which tries to work out how resource-intensive certain problems are for computers, so this whole thing sounds iffy right from the get-go.

Yeah but those resource-intensive problems can be fitted into specific classes of problems (P, NP, PSPACE etc), which is what I was talking about, so we are talking about the same thing.

So under my imagined theory you can classify people as ‘can solve: [ P, NP, PSPACE, … ]’. Wonder what they will do with the P class. (Wait, what did Yarvin want to do with them again?)

There’s really no good way to make any statements about what problems LLMs can solve in terms of complexity theory. To this day, LLMs, even the newfangled “reasoning” models, have not demonstrated that they can reliably solve computational problems in the first place. For example, LLMs cannot reliably make legal moves in chess and cannot reliably solve puzzles even when given the algorithm. LLM hypesters are in no position to make any claims about complexity theory.

Even if we have AIs that can reliably solve computational tasks (or, you know, just use computers properly), it still doesn’t change anything in terms of complexity theory, because complexity theory concerns itself with all possible algorithms, and any AI is just another algorithm in the end. If P != NP, it doesn’t matter how “intelligent” your AI is, it’s not solving NP-hard problems in polynomial time. And if some particularly bold hypester wants to claim that AI can efficiently solve all problems in NP, let’s just say that extraordinary claims require extraordinary evidence.

Koppelman is only saying “complexity theory” because he likes dropping buzzwords that sound good and doesn’t realize that some of them have actual meanings.

I heard him say “quantum” and immediately came here looking for fresh-baked sneers

Yeah but I was trying to combine complexity theory as a loose theory misused by tech people in relation to ‘people who get fired’. (Not that I don’t appreciate your post btw, I sadly have not seen any pro-AI people be real complexity theory cranks re the capabilities. I have seen an anti be a complexity theory crank, but that is only when I reread my own posts ;) ).

solve this sokoban or you’re fired

My new video about the anti-design of the tech industry where I talk about this little passage from an ACM article that set me off when I found it a few years back.

In short, before software started eating all the stuff “design” meant something. It described a process of finding the best way to satisfy a purpose. It was a response to the purpose.

The tech industry takes computation as being an immutable means and finds purposes it may satisfy. The purpose is a response to the tech.

p.s. sorry to spam. :)

vid: https://www.youtube.com/watch?v=ollyMSWSWOY pod: https://pnc.st/s/faster-and-worse/8ffce464/tech-as-anti-design

threads bsky: https://bsky.app/profile/fasterandworse.com/post/3ltwles4hkk2t masto: https://hci.social/@fasterandworse/114852024025529148

OpenAI claims that their AI can get a gold medal on the International Mathematical Olympiad. The public models still do poorly even after spending hundreds of dollars in computing costs, but we’ve got a super secret scary internal model! No, you cannot see it, it lives in Canada, but we’re gonna release it in a few months, along with GPT5 and Half-Life 3. The solutions are also written in an atrociously unreadable manner, which just shows how our model is so advanced and experimental, and definitely not to let a generous grader give a high score. (It would be real interesting if OpenAI had a tool that could rewrite something with better grammar, hmmm…) I definitely trust OpenAI’s major announcements here, they haven’t lied about anything involving math before and certainly wouldn’t have every incentive in the world to continue lying!

It does feel a little unfortunate that some critics like Gary Marcus are somewhat taking OpenAI’s claims at face value, when in my opinion, the entire problem is that nobody can independently verify any of their claims. If a tobacco company released a study about the effects of smoking on lung cancer and neglected to provide any experimental methodology, my main concern would not be the results of that study.

This result has me flummoxed frankly. I was expecting Google to get a gold medal this year since last year they won a silver and were a point away from gold. In fact, Google did announce after the fact that they had won gold.

But the OAI claim is that they have some secret sauce that allowed a “pure” llm to win gold and that the approach is totally generic- no search or tools like verifiers required. Big if true but ofc no one else is allowed to gaze at the mystery machine.

Also funny aside, the guy who lead the project was poached by the zucc. So he’s walking out the front door with the crown jewels lmaou.

So recently (two weeks ago), I noticed Gary Marcus made a lesswrong account to directly engage with the rationalists. I noted it in a previous stubsack thread

Predicting in advance: Gary Marcus will be dragged down by lesswrong, not lesswrong dragged up towards sanity. He’ll start to use lesswrong lingo and terminology and using P(some event) based on numbers pulled out of his ass.

And sure enough, he has started talking about P(Doom). I hate being right. To be more than fair to him, he is addressing the scenario of Elon Musk or someone similar pulling off something catastrophic by placing too much trust in LLMs shoved into something critical. But he really should know better by now that using their lingo and their crit-hype terminology strengthens them.

using their lingo and their crit-hype terminology strengthens them

We live in a world where the US vice president admits to reading siskind AI fan fiction, so that ship has probably sailed.

It has but we dont have to make it worse, we can create a small village that resists. Like the one small village in Gaul that resisted the Roman occupation.

Yeah, that metaphor fits my feeling. And to extend the metaphor, I thought Gary Marcus was, if not a member of the village, at least an ally, but he doesn’t seem to actually realize the battle lines. Like maybe to him hating on LLMs is just another way of pushing symbolic AI?

Could also just be environment, pretty hard to stay on one site staunchly if half the people around you are then people your radically oppose.

Also wonder about the only game in town factor

He knows the connectionist have basically won (insofar as you can construe competing scientific theories and engineering paradigms as winning or losing… which is kind of a bad framing), so that is why he pushing the “neurosymbolic” angle so hard.

(And I do think Gary Marcus is right that the neurosymbolic approaches has been neglected by the big LLM companies because they are narrower and you can’t “guarantee” success just by dumping a lot of compute on them, you need actual domain expertise to do the symbolic half.)

I appreciate the reference having read half a dozen Astérix albums in the last few days. I just hope our Alesia has yet to come.

Nikhil’s guest post at Zitron just went up - https://www.wheresyoured.at/the-remarkable-incompetence-at-the-heart-of-tech/

EDIT: the intro was strong enough I threw in $7. Second half is just as good.

Guys, how about we made the coming computer god a fan of Robert Nozick, what could go wrong?

I expect the time right around when the first ASI gets built to be chaotic, unstable, and scary

Somebody should touch grass, or check up on the news.

Tried to read that on a train. Resulted in a nap. Probably more productive use of time anyway.

Found a good security-related sneer in response to a low-skill exploit in Google Gemini (tl;dr: “send Gemini a prompt in white-on-white/0px text”):

I’ve got time, so I’ll fire off a sidenote:

In the immediate term, this bubble’s gonna be a goldmine of exploits - chatbots/LLMs are practically impossible to secure in any real way, and will likely be the most vulnerable part of any cybersecurity system under most circumstances. A human can resist being socially engineered, but these chatbots can’t really resist being jailbroken.

In the longer term, the one-two punch of vibe-coded programs proliferating in the wild (featuring easy-to-find and easy-to-exploit vulnerabilities) and the large scale brain drain/loss of expertise in the tech industry (from juniors failing to gain experience thanks to using LLMs and seniors getting laid off/retiring) will likely set back cybersecurity significantly, making crackers and cybercriminals’ jobs a lot easier for at least a few years.

I have been thinking about the true cost of running LLMs (of course, Ed Zitron and others have written about this a lot).

We take it for granted that large parts of the internet are available for free. Sure, a lot of it is plastered with ads, and paywalls are becoming increasingly common, but thanks to economies of scale (and a level of intrinsic motivation/altruism/idealism/vanity), it still used to be viable to provide information online without charging users for every bit of it. Same appears to be true for the tools to discover said information (search engines).

Compare this to the estimated true cost of running AI chatbots, which (according to the numbers I’m familiar with) may be tens or even hundreds of dollars a month for each user. For this price, users would get unreliable slop, and this slop could only be produced from the (mostly free) information that is already available online while disincentivizing creators from producing more of it (because search engine driven traffic is dying down).

I think the math is really abysmal here, and it may take some time to realize how bad it really is. We are used to big numbers from tech companies, but we rarely break them down to individual users.

Somehow reminds me of the astronomical cost of each bitcoin transaction (especially compared to the tiny cost of processing a single payment through established payment systems).

The big shift in per-action cost is what always seems to be missing from the conversation. Like, in a lot of my experience the per-request cost is basically negligible compared to the overhead of running the service in general. With LLMs not only do we see massive increases in overhead costs due to the training process necessary to build a usable model, each request that gets sent has a higher cost. This changes the scaling logic in ways that don’t appear to be getting priced in or planned for in discussions of the glorious AI technocapital future

With LLMs not only do we see massive increases in overhead costs due to the training process necessary to build a usable model, each request that gets sent has a higher cost. This changes the scaling logic in ways that don’t appear to be getting priced in or planned for in discussions of the glorious AI technocapital future

This is a very important point, I believe. I find it particularly ironic that the “traditional” Internet was fairly efficient in particular because many people were shown more or less the same content, and this fact also made it easier to carry out a certain degree of quality assurance. Now with chatbots, all this is being thrown overboard and extreme inefficiencies are being created, and apparently, the AI hypemongers are largely ignoring that.

I’ve done some of the numbers here, but don’t stand by them enough to share. I do estimate that products like Cursor or Claude are being sold at roughly an 80-90% discount compared to what’s sustainable, which is roughly in line with what Zitron has been saying, but it’s not precise enough for serious predictions.

Your last paragraph makes me think. We often idealize blockchains with VMs, e.g. Ethereum, as a global distributed computer, if the computer were an old Raspberry Pi. But it is Byzantine distributed; the (IMO excessive) cost goes towards establishing a useful property. If I pick another old computer with a useful property, like a radiation-hardened chipset comparable to a Gamecube or G3 Mac, then we have a spectrum of computers to think about. One end of the spectrum is fast, one end is cheap, one end is Byzantine, one end is rad-hardened, etc. Even GPUs are part of this; they’re not that fast, but can act in parallel over very wide data. In remarkably stark contrast, the cost of Transformers on GPUs doesn’t actually go towards any useful property! Anything Transformers can do, a cheaper more specialized algorithm could have also done.