Need to let loose a primal scream without collecting footnotes first? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post-Xitter web has spawned so many “esoteric” right-wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality-challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be).

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(Semi-obligatory thanks to @dgerard for starting this)

At work, I’ve been looking through Microsoft licenses. Not the funniest thing to do, but that’s why it’s called work.

The new licenses that have AI functions have a suspiciously low price tag, often as an introductory price (unclear for how long, or what it will cost later). This will be relevant later.

The licenses with Office, Teams and other things my users actually use are not only confusing in how they are bundled, they have been increasing in price. So I have been looking through and testing which licenses we can swap for cheaper ones without any difference for the users.

Having put in quite some time with it, we today crunched the numbers and realised that compared to last year we will save… (drumroll)… Approximately nothing!

But if we hadn’t done all this, the costs would have increased by about 50%.

We are just a small corporation; maybe big ones get discounts. But I think it is a clear indication of how the AI slop is financed: by price gouging corporate customers for the traditional products.

There’s got to be some kind of licensing clarity that can be actually legislated. This is just straight-up price gouging through obscurantism.

Character.AI Is Hosting Pedophile Chatbots That Groom Users Who Say They’re Underage

Three billion dollars and it’s going into Character AI AutoGroomer 4000s. Fuck this timeline.

automated grooming is just what progress is and you have to accept it. like the printing press

AI finally allowing grooming at scale is the kind of thing I’d expect to be the setup for a joke about Silicon Valley libertarians, not something that’s actually happening.

Just needs a guy who goes “if we don’t build the automated grooming machine, somebody else will”.

I heard the Chinese have a grooming machine that… actually I don’t think I want to finish that joke.

Ah yes the (Content warning, Jordan B peterson, and NSFW) Jordan B Peterson china tweet. And yes, it was real, don’t detox from drugs people!

Hi, guys. My name is Roy. And for the most evil invention in the world contest, I invented a child molesting robot. It is a robot designed to molest children.

You see, it’s powered by solar rechargeable fuel cells and it costs pennies to manufacture. It can theoretically molest twice as many children as a human molester in, quite frankly, half the time.

At least The Rock’s child molesting robot didn’t require dedicated nuclear power plants

I’ve seen people defend these weird things as being ‘coping mechanisms.’ What kind of coping mechanism tells you to commit suicide (in like, at least two different cases I can think of off the top of my head) and tries to groom you?

OK to start us off how about some Simulation Hypothesis crankery I found posted on ActivityPub: Do we live in a computer simulation? (Article), The second law of infodynamics and its implications for the simulated universe hypothesis (PDF)

Someone who’s actually good at physics could do a better job of sneering at this than me, but I mean, look at this:

My law can confirm how genetic information behaves. But it also indicates that genetic mutations are at the most fundamental level not just random events, as Darwin’s theory suggests.

A super complex universe like ours, if it were a simulation, would require a built-in data optimisation and compression in order to reduce the computational power and the data storage requirements to run the simulation.

This feels like quackery but I can’t find a goal…

But if they both hold up to scrutiny, this is perhaps the first time scientific evidence supporting this theory has been produced – as explored in my recent book.

There it is.

Edit: oh God it’s worse than I thought

The web design almost makes me nostalgic for geocities fan pages. The citations that include himself ~10 times and the greatest hits of the last 50 years of physics, biology, and computer science, and Baudrillard of course. The journal of which this author is the lead editor and which includes the phrase “information as the fifth state of matter” in the scope description.

Oh God the deeper I dig the weirder it gets. Trying to confirm whether the Information Physics Institute is legit at all and found their list of members, one of whom listed their relevant expertise as “Writer, Roleplayer, Singer, Actor, Gamer”. Another lists “Hyperspace and machine elves”. One very honestly simply says “N/A”

The Gmail address also lends the whole thing an air of authority. Like, you’ve already paid for the domain, guys.

OK this member list experience is just 👨🍳😗👌

- Psychonaut

- Practitioner of Yoga

- Quantum, Consciousness, Christian Theology, Creativity

Perfect. No notes.

I haven’t seen qualifications this relevant and high-quality since “architects and engineers for 9/11 truth.”

the terrible trifecta

Still a bit sad we are not doing nano anymore.

Wait for AI and Crypto 2.0 to burn out, we’ll get there

I had a flash of a vision of tomorrow, it is Nano crypto AI

Sadly it seems the next one is gonna be Quantum.

You see, nano is real now and boring

But things being real doesn’t stop the cranks. See quantum.

Quantum superpredicting machines are not real, and that’s what they’re about. Nano- has lots of uninteresting bs like ultraefficient fluorescent things, but nanomachines are not and that was interesting to them (until they got bored)

Finally computer science is a real field, there are cranks! Suck it physics and mathematics, we are a real boy now!

I love the word cloud on the side. What is 6G doing there

6G nanometer-wave, gently caressing your mitochondria thanks to the power of antiferromagnets and BORIS:

Has this person turned up shilling their book on Coast to Coast AM with George Noory yet? If not, I think it’s a lock for 2025

Despite the lack of evidence, this idea is gaining traction in scientific circles as well as in the entertainment industry.

lol

General sneer against the SH: I choose to dismiss it entirely for the same reason that I dismiss solipsism or brain-in-a-vat-ism: it’s a non-starter. Either it’s false and we’ve gotta come up with better ideas for all this shit we’re in, or it’s true and nothing is real, so why bother with philosophical or metaphysical inquiry?

The SH is catnip to “scientific types” who don’t recognize it as a rebrand of classical metaphysics. After all, they know how computers work, and it can’t be that hard to simulate the entire workings of a universe down to the quark level, can it? So surely someone just a bit smarter than themselves has already done it and is running a simulation with them in it. It’s basically elementary!

Ha very clever, but as quantum level effects only occur when somebody is looking at it, they dont have to simulate it at quark level all the time. I watched what the bleep do we know, im very smart.

If you think about it, a slice of pizza is basically a computer that simulates a slice of pizza down to the quark level.

You’re missing the most obvious implication, though. If it’s all simulated or there’s a Cartesian demon afflicting me then none of you have any moral weight. Even more importantly if we assume that the SH is true then it means I’m smarter than you because I thought of it first (neener neener).

But this quickly runs into the ‘don’t create your own unbreakable crypto system’ problem. There are people out there who are a lot smarter who can quickly point out the holes in these simulation arguments. (The smartest of whom go ‘nah, that is dumb’; sadly I’m not that enlightened, as I have argued a few times here before how this is all amateur theology and has nothing to do with STEM/computer science. (E: my gripes are mostly with the ‘ancestor simulation’ theory, however.))

The “simulation hypothesis” is an ego flex for men who want God to look like them.

Since the Middle Ages we’ve reduced God’s divine realm from the glorious kingdom of heaven to an office chair in front of a computer screen, rather than an office chair behind it.

I don’t have the time to deep dive this RN but information dynamics or infodynamics looks to be, let’s say, “alternative science” for the purposes of trying to up the credibility of the simulation hypothesis.

How sneerable is the entire “infodynamics” field? Because it seems like it should be pretty sneerable. The first referenced paper on the “second law of infodynamics” seems to indicate that information has some kind of concrete energy which brings to mind that experiment where they tried to weigh someone as they died to identify the mass of the human soul. Also it feels like a gross misunderstanding to describe a physical system as gaining or losing information in the Shannon framework since unless the total size of the possibility space is changing there’s not a change in total information. Like, all strings of 100 characters have the same level of information even though only a very few actually mean anything in a given language. I’m not sure it makes sense to talk about the amount of information in a system increasing or decreasing naturally outside of data loss in transmission? IDK I’m way out of my depth here but it smells like BS and the limited pool of citations doesn’t build confidence.

I read one of the papers. About the specific question you have: given a string of bits s, they’re making the choice to associate the empirical distribution to s, as if s was generated by an iid Bernoulli process. So if s has 10 zero bits and 30 one bits, its associated empirical distribution is Ber(3/4). This is the distribution which they’re calculating the entropy of. I have no idea on what basis they are making this choice.
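To make that choice concrete, here is a minimal sketch of computing the Shannon entropy of a bit string's empirical distribution. This is an illustration of the calculation as described in this comment, not code from the paper; the function name `empirical_entropy_bits` is made up for the example.

```python
import math
from collections import Counter


def empirical_entropy_bits(s: str) -> float:
    """Shannon entropy, in bits per symbol, of the empirical distribution of s.

    Treats s as if every symbol were an iid draw, so only the symbol
    frequencies matter and the ordering of the string is ignored.
    """
    counts = Counter(s)
    n = len(s)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


# The example from the comment: 10 zero bits and 30 one bits.
s = "0" * 10 + "1" * 30
p_one = s.count("1") / len(s)   # fraction of ones, i.e. the Ber(p) parameter
h = empirical_entropy_bits(s)   # entropy of Ber(3/4), roughly 0.811 bits

# Reordering the bits leaves the result unchanged, because only the
# counts enter the formula.
h_reversed = empirical_entropy_bits(s[::-1])
```

Note that any rearrangement of the same 40 bits gets the same number, which is one way of seeing why this quantity says nothing about whether the string "means" anything.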

The rest of the paper didn’t make sense to me - they are somehow assigning a number N of “information states” which can change over time as the memory cells fail. I honestly have no idea what it’s supposed to mean and kinda suspect the whole thing is rubbish.

Edit: after reading the author’s quotes from the associated hype article I’m 100% sure it’s rubbish. It’s also really funny that they didn’t manage to catch the COVID-19 research hype train so they’ve pivoted to the simulation hypothesis.

Oh the author here is absolutely a piece of work.

Here’s an interview where he’s talking about the biblical support for all of this and the ancient Greek origins of blah blah blah.

I can’t definitely predict this guy’s career trajectory, but one of those cults where they have to wear togas is not out of the question.

Not only is the universe a simulation, the Catholics just had it right, isnt that neat.

I sneered that in a blog post last year, as it happens.

“feel free to ignore any science “news” that’s just a press release from the guy who made it up.”

In particular, the 2022 discovery of the second law of information dynamics (by me) facilitates new and interesting research tools (by me) at the intersection between physics and information (according to me).

Gotta love “science” that is cited by no-one and cites the author’s previous work which was also cited by no one. Really the media should do better about not giving cranks an authoritative sounding platform, but that would lead to slightly fewer eyes on ads and we can’t have that now can we.

i mean, the Ray Charles one sounds fun. My 1st year maths lecturer demonstrated the importance of not dividing by zero by mathematically proving that if 1=0, then he was Brigitte Bardot. We did actually applaud.

You’re doing the ~~lord’s~~ simulation-author’s work, my friend.

If you’re in the mood for a novel that dunks on these nerds, I highly recommend Jason Pargin’s If This Book Exists, You’re in the Wrong Universe.

https://en.wikipedia.org/wiki/If_This_Book_Exists,_You're_in_the_Wrong_Universe

It is the fourth book in the John Dies at the End series

oh damn, I just gave the (fun but absolute mess of a) movie another watch and was wondering if they ever wrote more stories in the series — I knew they wrote a sequel to John Dies at the End, but I lost track of it after that. it looks like I’ve got a few books to pick up!

Someone (maybe you) recommended this book here awhile back. But it’s the fourth book in a series so I had to read the other three first and so have only just now started it.

now seeing EAs being deeply concerned about RFK running health during a H5N1 outbreak

dust specks vs leopards

The way many of the popular rat blogs started to endorse Harris in the last second before the US election felt a lot like an attempt at plausible deniability.

Sure, we’ve been laying the groundwork for this for a decade, but we wanted someone from our cult of personality to undermine democracy and replace it with explicit billionaire rule, not someone with his own cult of personality.

If H5N1 does turn into a full-blown outbreak, part of me expects it’ll rack up a heavier deathtoll than COVID.

Anyone here read “World War Z”? There’s a section there about how the health authorities in basically all countries suppress and deny the incipient zombie outbreak. I think about that a lot nowadays.

Anyway the COVID response, while ultimately better than the worst case scenario (Spanish Flu 2.0), has made me really unconvinced we will do anything about climate change. We had a clear danger of death for millions of people, and the news was dominated by skeptics. Maybe if it had targeted kids instead of the very old it would have been different.

It’s not just systemic media head-up-the-assery, there’s also the whole thing about oil companies and petrostates bankrolling climate denialism since the 70s.

When I run into “Climate change is a conspiracy” I do the wide-eyed look of recognition and go “Yeah I know! Have you heard about the Exxon files?” and lead them down that rabbit hole. If they want to think in terms of conspiracies, at least use an actual, factual conspiracy.

andrew tate’s “university” had a leak, exposing cca 800k usernames and 325k email addresses of people that failed to pay $50 monthly fee

entire thing available at DDoSecrets, just gonna drop tree of that torrent:

    ├── Private Channels
    │   ├── AI Automation Agency.7z
    │   ├── Business Mastery.7z
    │   ├── Content Creation + AI Campus.7z
    │   ├── Copywriting.7z
    │   ├── Crypto DeFi.7z
    │   ├── Crypto Trading.7z
    │   ├── Cryptocurrency Investing.7z
    │   ├── Ecommerce.7z
    │   ├── Health & Fitness.7z
    │   ├── Hustler's Campus.7z
    │   ├── Social Media & Client Acquisition.7z
    │   └── The Real World.7z
    ├── Public Channels
    │   ├── AI Automation Agency.7z
    │   ├── Business Mastery.7z
    │   ├── Content Creation + AI Campus.7z
    │   ├── Copywriting.7z
    │   ├── Crypto DeFi.7z
    │   ├── Crypto Trading.7z
    │   ├── Cryptocurrency Investing.7z
    │   ├── Ecommerce.7z
    │   ├── Fitness.7z
    │   ├── Hustler's Campus.7z
    │   ├── Social Media & Client Acquisition.7z
    │   └── The Real World.7z
    └── users.json.7z

yeah i studied defi and dropshipping at andrew tate’s hustler university

statements dreamed up by the utterly deranged

“Yeah I thought about going into civil engineering but the department of hustling really spoke to me y’know?”

i have never felt imposter syndrome since

nikhil suresh, probably

I’m just curious how many hits you would get if you searched for ‘4 hour work week’, as iirc that is where all these people stole the idea from. (well, not totally, the idea they are stealing is selling others the idea of the 4 hour work week, but I hope you get what I mean, 4 hour work weeks all the way down).

See, isn’t the 4-hour work week one of those “just make other people work 50+hours a week on your behalf and take the money they’ve earned for it” schemes? This looks much broader rather than being married to a specific sub-scam. Like, if crypto is down they can sell drop shipping. If drop shipping is cringe they can sell AI slop monetization. If Amazon tightens their standards and starts locking out AI stuff they can go back to crypto.

It’s in the same genre of trying to monetize being a conspicuous asshole, but it is one of the more complex evolutions, at least compared to the standard grift-luencer.

I think it is that book, yes. It started a lot of these things and a lot of these people (I’m generalizing here, but the Tates are imho part of a long line of manosphere people who all do this kind of stuff: selling others courses on dropshipping, courses on setting up passive income streams, courses on getting laid/mindset, etc.). And it seems like the only thing they manage to really sell is courses. I don’t think it is that complex an evolution, more like the natural progression; of course they get into crypto and AI slop. I think the only real difference between somebody like Tate and the other weirdos who did this (somebody like Cernovich was also one of these people, for example) is that Tate has a little bit of charisma for an important market segment (the under-18-year-olds).

obligatory IBCK.

that’s not even that, maybe with exception for tate &co. it’s just shilling shitcoins and selling hopes like that one tiktok spammer mussy described by 404media

alright, it’s 14gb of json files. let me figure out how to grep it in a reasonable way and i’ll get there. for now i’ll say that the biggest one (by size) in private channels is “crypto trading” (2 gb), then “crypto investing” (1.6 gb), while in public channels it’s “the real world” (1.7 gb) and “ecommerce” (0.87 gb)

not many. 2 hits for “4 hour workweek” and 35 for “4 hour work week” another 4 for “4-hour workweek” and 5 for “4-hour work week”
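For anyone wanting to run this kind of tally on a large pile of text files, here is a rough sketch of counting spelling variants of a phrase without loading whole files into memory. It is not the method actually used above; the function names and the `*.json` glob are assumptions for the example, and it assumes the files have line breaks (a multi-gigabyte single-line file would still be read in one go).

```python
import re
from collections import Counter
from pathlib import Path

# Matches "4 hour work week", "4-hour workweek" and the other two
# combinations of space/hyphen and "work week"/"workweek".
PATTERN = re.compile(r"4[ -]hour work ?week", re.IGNORECASE)


def count_variants(lines):
    """Count each lower-cased spelling variant across an iterable of lines."""
    hits = Counter()
    for line in lines:
        for match in PATTERN.finditer(line):
            hits[match.group(0).lower()] += 1
    return hits


def count_in_tree(root):
    """Walk a directory tree and tally variants in every *.json file."""
    total = Counter()
    for path in Path(root).rglob("*.json"):
        # Iterating the file handle reads one line at a time.
        with open(path, encoding="utf-8", errors="replace") as fh:
            total += count_variants(fh)
    return total


# Tiny made-up sample to show the shape of the output.
sample = [
    '{"msg": "read the 4 hour work week"}',
    '{"msg": "4-Hour Workweek changed my life, 4 hour workweek"}',
]
sample_hits = count_variants(sample)
```

Keeping the variants separate in a `Counter` gives the same kind of per-spelling breakdown as the numbers quoted above.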

Thanks!

Ugh. Tangentially related: a streamer I follow has been getting lots of people in her chat saying that one of the taters wants to hire her. I’ve started noticing comments like “I love white culture” and weird fantasies about the Roman Empire. Historically she’s also been asked multiple times what her ethnicity is (she is white), specifically if she is Scandinavian, which I am starting to view under some kind of white supremacist lens. I’ve told her to ignore anything mentioning the taters or “top g” as one of them is known.

Honestly, I’m worried that she could get brigaded by these creeps, even if she shows no response whatsoever.

I mean, according to the charges, Tate hiring young women usually meant some variety of sex trafficking and adult video that he took the money for. Tbh the whole space is sufficiently toxic that she ought to start dropping the banhammer judiciously, but IDK what her situation is politically, economically, etc.

The word “deranged” is getting a workout lately, ain’t it?

fwiw i attribute this to the stop doing x meme (that I believe skillissuer is referencing):

been thinking about making one for (memory) safe c++, but unfortunately I don’t know the topic deep enough to make the meme good

One of my favorite meme templates for all the text and images you can shove into it, but trying to explain why you have one saved on your desktop just makes you look like the Time Cube guy

yeah that’s what i was going for

magnificent!

most of the dedicated Niantic (Pokemon Go, Ingress) game players I know figured the company was using their positioning data and phone sensors to help make better navigational algorithms. well surprise, it’s worse than that: they’re doing a generative AI model that looks to me like it’s tuned specifically for surveillance and warfare (though Niantic is of course just saying this kind of model can be used for robots… seagull meme, “what are the robots for, fucker? why are you being so vague about who’s asking for this type of model?”)

Pokemon Go To The War Crimes

Pokemon Go To The Hague

Quick, find the guys who were taping their phones to a ceiling fan and have them get to it!

Jokes aside I’m actually curious to see what happens when this one screws up. My money is on one of the Boston Dynamics dogs running in circles about 30 feet from the intended target without even establishing line of sight. They’ll certainly have to test it somehow before it starts autonomously ordering drone strikes on innocent people’s homes, right? Right?

how come every academic I have worked with has given me some variation of

they already have all of my data, I don’t really care about my privacy

i’m in computer science 🙃

the marketing fucks and executive ghouls who came up with this meme (that used to surface every time I talked about wanting to de-Google) are also the ones who make a fuckton of money off of having a real-time firehose of personal data straight from the source, cause that’s by far what’s most valuable to advertisers and surveillance firms (but I repeat myself)

The thing is, I’m pretty sure the overwhelming majority of the data is effectively worthless outside of the online advertising grift. It’s thoughtlessly collected junk sold as data for its own sake.

It works because no one working in advertising knows what a human being is.

my strong impression is that surveillance advertising has been an unmitigated disaster for the ability to actually sell products in any kind of sensible way — see also the success of influencer marketing, under the (utterly false) pretense that it’s less targeted and more authentic than the rest of the shit we’re used to

but marketing is an industry run by utterly incompetent morally bankrupt fuckheads, so my impression is also that none of them particularly know or care that the majority of what they’re doing doesn’t work; there’s power in surveillance and they like that feeling, so the data remains extremely valuable on the market

When people start going on about having nothing to hide usually it helps to point out how there’s currently no legal way to have a movie or a series episode saved to your hard drive.

I suspect great overlap between the nothing-to-hide people and the people who watch the worst porn imaginable but think incognito mode is magic.

what’s wild is in the ideal case, a person who really doesn’t have anything to hide is both unimaginably dull and has effectively just confessed that they would sell you out to the authorities for any or no reason at all

people with nothing to hide are the worst people

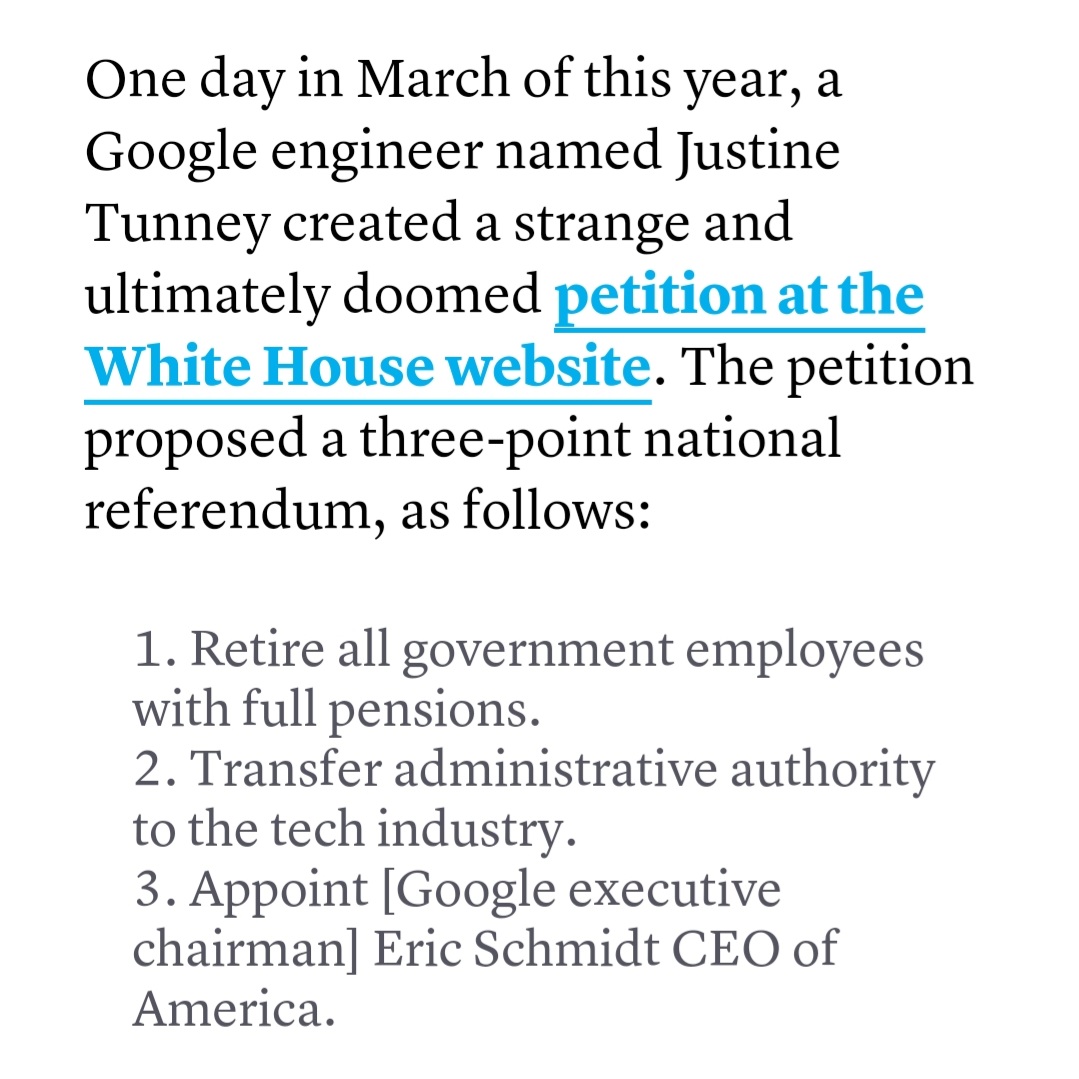

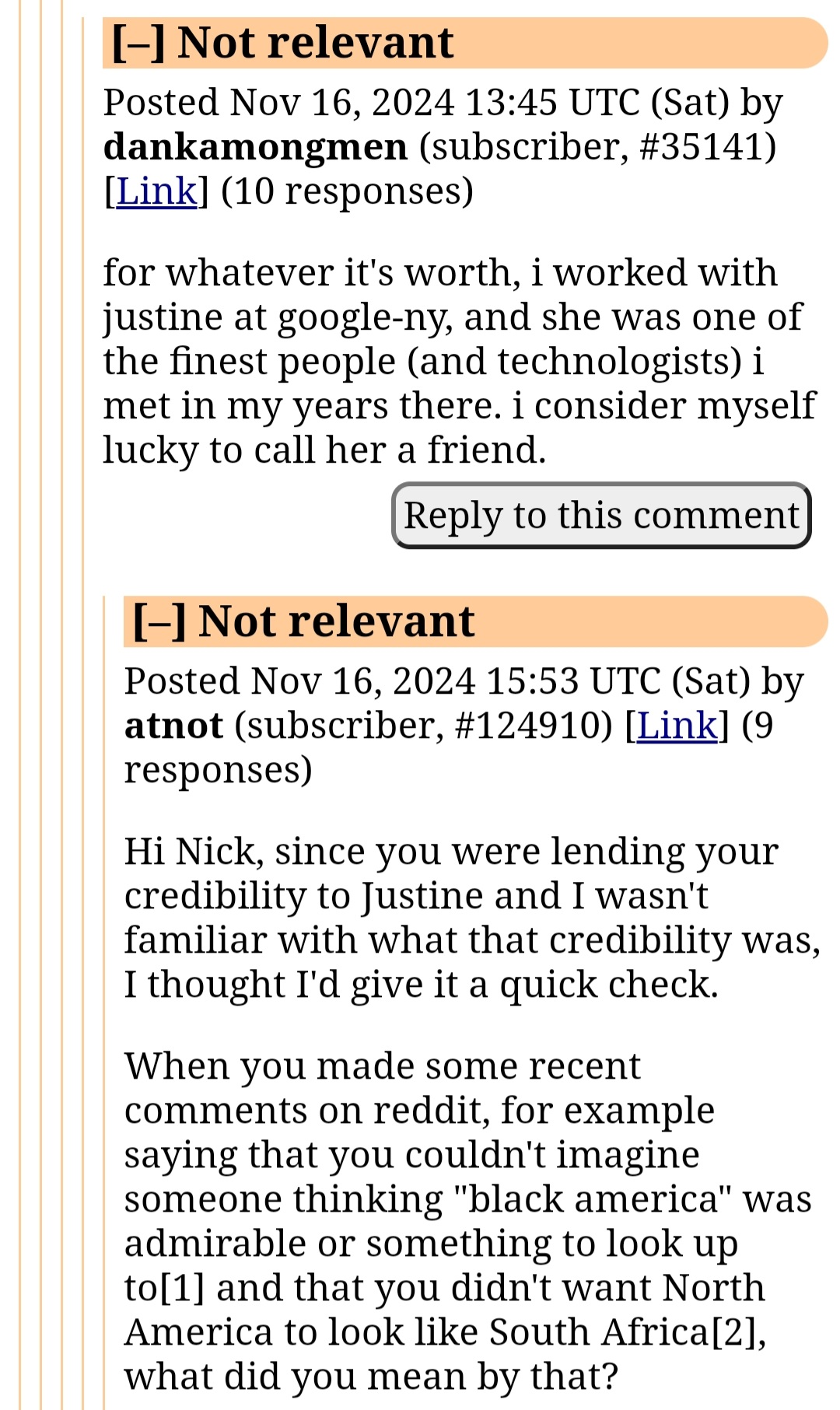

The mask comes off at LWN, as two editors (jake and corbet) dive in to frantically defend the honour of Justine fucking Tunney against multiple people pointing out she’s a Nazi who fills her projects with racist dogwhistles

fuck me that is some awful fucking moderation. I can’t imagine being so fucking bad at this that I:

- dole out a ban for being rude to a fascist

- dole out a second ban because somebody in the community did some basic fucking due diligence and found out one of the accounts defending the above fascist has been just a gigantic racist piece of shit elsewhere, surprise

- in the process of the above, I create a safe space for a fascist and her friends

but for so many of these people, somehow that’s what moderation is? fucking wild, how the fuck did we get here

See, you’re assuming the goal of moderation is to maintain a healthy social space online. By definition this excludes fascists. It’s that old story about how to make sure your punk bar doesn’t turn into a nazi punk bar. But what if instead my goal is to keep the peace in my nazi punk bar so that the normies and casuals keep filtering in and out and making me enough money that I can stay in business? Then this strategy makes more sense.

Is Google lacing their free coffee??? How could a woman with at least one college degree believe that the government is even mechanically capable of dissolving into a throne for Eric Schmidt.

Post by Corbet the editor. “We get it: people wish that we had not highlighted work by this particular author. Had we known more about the person in question, we might have shied away from the topic. But the article is out now, it describes a bit of interesting technology, people have had their say, please let’s leave it at that.”

So you updated the article to reflect this right? padme.jpg

Seems like they’ve actually done this now. There’s a preface note now.

This topic was chosen based on the technical merit of the project before we were aware of its author’s political views and controversies. Our coverage of technical projects is never an endorsement of the developers’ political views. The moderation of comments here is not meant to defend, or defame, anybody, but is in keeping with our longstanding policy against personal attacks. We could certainly have handled both topic selection and moderation better, and will endeavor to do so going forward.

Which is better than nothing, I guess, but still feels like a cheap cop-out.

Side-note: I can actually believe that they didn’t know about Justine being a fucking nazi when publishing this, because I remember stumbling across some of her projects and actually being impressed by it, and then I found out what an absolute rabbit hole of weird shit this person is. So I kinda get seeing the portable executables project, thinking, wow, this is actually neat, and running with it.

Not that this is an excuse, because when you write articles for a website that should come with a bit of research about the people and topic you choose to cover and you have a bit more responsibility than someone who’s just browsing around, but what do I know.

Well, at least they put down something. More than I expected.

And doing research on people? In this economy?

so is corbet the same kind of fucker that’ll complain “everything is so political nowadays”? it seems like they are

Centrists Don’t Fucking Be Like This challenge not achieved yet again

fwiw this link didn’t jump me to a specific reply (if you meant to highlight a particular one)

It didn’t scroll for me either but there’s a reply by this corbet person with a highlighted background which I assume is the one intended to be linked to

Not the only trans NRXer to pull this I’m afraid. I could say things but I really can’t I think.

@dgerard @BlueMonday1984 also, and I know this is way beside the point, update the design of your website, motherfuckers

I don’t run any websites, what are you coming at me for

Man, how do you even find this stuff? :D

tripped over it just reading LWN and went “holy shit”

My professor is typing questions into chat gpt in class rn be so fucking for real

He’s using it to give examples of exam question answers. The embarrassment

I’d pipe up and go “uhhh hey prof, aren’t you being paid to, like, impart knowledge?”

(I should note that I have an extremely deficient fucks pool, and do not mind pissing off fuckwits. but I understand it’s not always viable to do)

It was there and gone fairly quickly and I wouldn’t say I’m a model student so I didn’t say anything. I’ve talked to him about Chat GPT before though…

“So, professor sir, are you OK with psychologically torturing Black people, or do you just not care?”

I mean, that kind of suggests that you could use chatGPT to confabulate work for his class and he wouldn’t have room to complain? Not that I’d recommend testing that, because using ChatGPT in this way is not indicative of an internally consistent worldview informing those judgements.

We’re going to be answering two essay questions in an in-class test instead of writing a paper this year specifically to prevent chat gpt abuse. Which he laughed and joked about because he really believes chat gpt can produce good results!

I’m pretty sure you could download a decent markov chain generator onto a TI-89 and do basically the same thing with a more in-class appropriate tool, but speaking as someone with dogshit handwriting I’m so glad to have graduated before this was a concern. Godspeed, my friend.

gentlemen, this means war

-me imagining myself paying to sit through that

HN runs smack into end-stage Effective Altruism and exhibits confusion

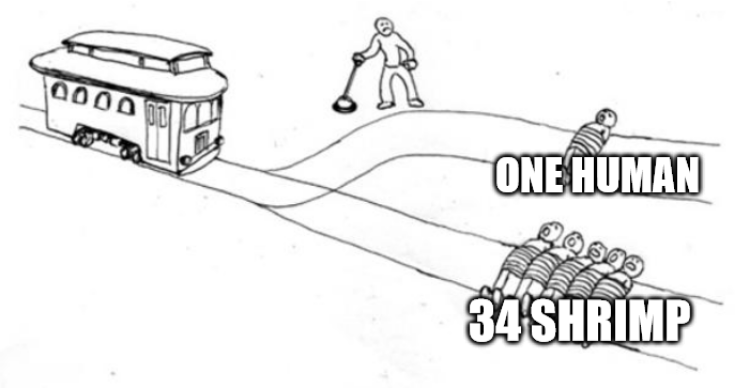

Title “The shrimp welfare project” is editorialized, the original is “The Best Charity Isn’t What You Think”.

This almost reads like an attempt at a reductio ad absurdum of worrying about animal welfare, like you are supposed to be a ridiculous hypocrite if you think factory farming is fucked yet are indifferent to the cumulative suffering caused to termites every time an exterminator sprays your house so it doesn’t crumble.

Relying on the mean estimate, giving a dollar to the shrimp welfare project prevents, on average, as much pain as preventing 285 humans from painfully dying by freezing to death and suffocating. This would make three human deaths painless per penny, when otherwise the people would have slowly frozen and suffocated to death.

Dog, you’ve lost the plot.

FWIW a charity providing the means to stun shrimp before death by freezing as is the case here isn’t indefensible, but the way it’s framed as some sort of an ethical slam dunk even compared to say donating to refugee care just makes it too obvious you’d be giving money to people who are weird in a bad way.

If we came across very mentally disabled people or extremely early babies (perhaps in a world where we could extract fetuses from the womb after just a few weeks) that could feel pain but only had cognition as complex as shrimp, it would be bad if they were burned with a hot iron, so that they cried out. It’s not just because they’d be smart later, as their hurting would still be bad if the babies were terminally ill so that they wouldn’t be smart later, or, in the case of the cognitively enfeebled who’d be permanently mentally stunted.

wat

This entire fucking shrimp paragraph is what failing philosophy does to a mf

rat endgame being eugenics again?? no waaay

Did the human pet guy write this

Ohhhh, so this is a forced-birther agenda item. Got it

I think the author is just honestly trying to equate freezing shrimps with torturing weirdly specifically disabled babies and senile adults medieval style. If you said you’d pledge like $17 to shrimp welfare for every terminated pregnancy I’m sure they’d be perfectly fine with it.

I happened upon a thread in the EA forums started by someone who was trying to argue EAs into taking a more forced-birth position and what it came down to was that it wouldn’t be as efficient as using the same resources to advocate for animal welfare, due to some perceived human/chicken embryo exchange rate.

So… we should be vegetarians?

No, just replace all your sense of morality with utilitarian shrimp algebra. If you end up vegetarian, so be it.

Apologies for focusing on just one sentence of this article, but I feel like it’s crucial to the overall argument:

… if [shrimp] suffer only 3% as intensely as we do …

Does this proposition make sense? It’s not obvious to me that we can assign percentage values to suffering, or compare it to human suffering, or treat the values in a linear fashion.

It reminds me of that vaguely absurd thought experiment where you compare one person undergoing a lifetime of intense torture vs billions upon billions of humans getting a fleck of dust in their eyes. I just cannot square choosing the former with my conscience. Maybe I’m too unimaginative to comprehend so many billions of bits of dust.

lol hahah.

Not that I’m a super fan of the fact that shrimp have to die for my pasta, but it feels weird that they just pulled a 3% number out of a hat, as if morals could be wrapped up in a box with a bow tied around it so you don’t have to do any thinking beyond 1500×0.03×1 dollars means I should donate to this guy’s shrimp startup instead of the food bank!

Shrimp cocktail counts as vegetarian if there are fewer than 17 prawns in it, since it rounds down to zero souls.

Hold it right there criminal scum!

spoiler

Image of two casually dressed guys pointing fingerguns at the camera, green beams are coming out of the fingerguns. The Vegan Police from the movie Scott Pilgrim vs. The World. The cops are played by Thomas Jane and Clifton Collins Jr, the latter is wearing sunglasses, while it is dark.

I was just notified of the corollary that eating 18 shrimp rounds up to cannibalism.

Ah you see, the moment you entered the realm of numbers and estimates, you’ve lost! I activate my trap card: 「Bayesian Reasoning」 to Explain Away those numbers. This lets me draw the「Domain Expert」 card from my deck, which I place in the epistemic status position, which boosts my confidence by 2000 IQ points!

Obviously mathematically comparing suffering is the wrong framework to apply here. I propose a return to Aristotelian virtue ethics. The best shrimp is a tasty one, the best man is a philosopher-king who agrees with everything I say, and the best EA never gets past drunkenly ranting at their fellow undergrads.

Effective Altruism Declares War on the Entire State of Louisiana

a better-thought-out announcement is coming later today, but our WriteFreely instance at gibberish.awful.systems has reached a roughly production-ready state (and you can hack on its frontend by modifying the `templates`, `pages`, `static`, and `less` directories in this repo and opening a PR)! awful.systems regulars can ask for an account and I’ll DM an invite link!

Oh hey, looks like another ChatGPT-assisted legal filing, this time in an expert declaration about the dangers of generative AI: https://www.sfgate.com/tech/article/stanford-professor-lying-and-technology-19937258.php

The two missing papers are titled, according to Hancock, “Deepfakes and the Illusion of Authenticity: Cognitive Processes Behind Misinformation Acceptance” and “The Influence of Deepfake Videos on Political Attitudes and Behavior.” The expert declaration’s bibliography includes links to these papers, but they currently lead to an error screen.

Irony can be pretty ironic sometimes.

Never thought I’d die fighting alongside a League of Legends fan.

Aye. That I could do.

You just know Netflix’s inbox is getting flooded with the absolute worst shit League of Legends players can come up with right now

And having played more LoL than I care to admit in high school, that’s some truly vile shit. If only it actually made it through the filters to whoever actually made the relevant choices.

Stack Overflow, now with the sponsored crypto blogspam: “Joining forces: How Web2 and Web3 developers can build together”

I really love the byline here. “Kindest view of one another”. Seething rage at the bullshittery these “web3” fuckheads keep producing certainly isn’t kind for sure.

Dude discovers that one LLM model is not entirely shit at chess, spends time and tokens proving that other models are actually also not shit at chess.

The irony? He’s comparing it against Stockfish, a computer chess engine. Computers playing chess at a superhuman level is a solved problem. LLMs have now slightly approached that level.

For one, gpt-3.5-turbo-instruct rarely suggests illegal moves,

Writeup https://dynomight.net/more-chess/

HN discussion https://news.ycombinator.com/item?id=42206817

LLMs sometimes struggle to give legal moves. In these experiments, I try 10 times and if there’s still no legal move, I just pick one at random.

uhh

Battlechess both could choose legal moves and also had cool animations. Battlechess wins again!

Particularly hilarious is how thoroughly they’re missing the point. The fact that it suggests illegal moves at all means that no matter how good its openings are, the scaling laws and emergent behaviors haven’t magicked up an internal model of the game of Chess or even the state of the chess board it’s working with. I feel like playing games is a particularly powerful example of this because the game rules provide a very clear structure to model and it’s very obvious when that model doesn’t exist.
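For anyone who wants to see how little is going on in the “try 10 times, then pick at random” procedure quoted above, here is a rough sketch of it. This is not the author’s code: `pick_move`, `suggest`, and the move strings are made-up names for illustration, and the legal-move list is assumed to come from some real chess library.

```python
import random

def pick_move(suggest, legal_moves, max_tries=10, rng=random):
    """Ask `suggest()` for a move up to `max_tries` times.

    Returns (move, attempts_used, fell_back). If no suggestion is legal,
    a random legal move is returned and `fell_back` is True.
    `suggest` is a hypothetical stand-in for a call to the LLM.
    """
    legal = set(legal_moves)
    for attempt in range(1, max_tries + 1):
        move = suggest()
        if move in legal:
            return move, attempt, False
    # Every suggestion was illegal: give up and play a random legal move.
    return rng.choice(sorted(legal)), max_tries, True
```

Note that the `fell_back` flag is the interesting part: any game where it ever comes back True is a game partly played by a random number generator, not by the model.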

I remember several months (a year?) ago when the news got out that gpt-3.5-turbo-papillion-grumpalumpgus could play chess around ~1600 elo. I was skeptical the apparent skill wasn’t just a hacked-on patch to stop folks from clowning on their models on xitter. Like if an LLM had just read the instructions of chess and started playing like a competent player, that would be genuinely impressive. But if what happened is they generated 10^12 synthetic games of chess played by stonk fish and used that to train the model- that ain’t an emergent ability, that’s just brute forcing chess. The fact that larger, open-source models that perform better on other benchmarks still flail at chess is just a glaring red flag that something funky was going on w/ gpt-3.5-turbo-instruct to drive home the “eMeRgEnCe” narrative. I’d bet decent odds if you played with modified rules (knights move a one-space-longer L shape, you cannot move a pawn 2 moves after it last moved, etc), gpt-3.5 would fuckin suck.

Edit: the author asks “why skill go down tho” on later models. Like isn’t it obvious? At that moment of time, chess skills weren’t a priority so the trillions of synthetic games weren’t included in the training? Like this isn’t that big of a mystery…? It’s not like other NN haven’t been trained to play chess…

@gerikson @BlueMonday1984 the only analysis of computer chess anybody needs https://youtu.be/DpXy041BIlA?si=a1vU3zmOWs8UqlSQ

Here are the results of these three models against Stockfish—a standard chess AI—on level 1, with a maximum of 0.01 seconds to make each move

I’m not a Chess person or familiar with Stockfish so take this with a grain of salt, but I found a few interesting things perusing the code / docs which I think makes useful context.

Skill Level

I assume “level” refers to Stockfish’s Skill Level option.

If I mathed right, Stockfish roughly estimates Skill Level 1 to be around 1445 ELO (source). However it says “This Elo rating has been calibrated at a time control of 60s+0.6s” so it may be significantly lower here.
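In case anyone wants to check the math, here is roughly how I got that number. Big caveat: the polynomial coefficients and the 1320/3190 Elo bounds below are what I remember from the Stockfish source, where they map a UCI_Elo value to a skill level. Treat them as assumptions and check them against the version you are actually running. The sketch just inverts that mapping by bisection.

```python
# Assumed constants, recalled from Stockfish's Elo-to-skill-level mapping.
# They may differ between Stockfish versions.
LOWEST_ELO = 1320
HIGHEST_ELO = 3190

def level_from_elo(elo):
    """Skill level (unclamped) that the assumed formula assigns to an Elo."""
    e = (elo - LOWEST_ELO) / (HIGHEST_ELO - LOWEST_ELO)
    return ((37.2473 * e - 40.8525) * e + 22.2943) * e - 0.311438

def elo_from_level(level, tol=1e-6):
    """Invert level_from_elo by bisection (the cubic is increasing on this range)."""
    lo, hi = float(LOWEST_ELO), float(HIGHEST_ELO)
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if level_from_elo(mid) < level:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

print(round(elo_from_level(1)))
```

Under those assumptions Skill Level 1 lands in the mid-1440s, which is where the estimate above comes from.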

Skill Level affects the search depth (appears to use depth of 1 at Skill Level 1). It also enables MultiPV 4 to compute the four best principal variations and randomly pick from them (more randomly at lower skill levels).

Move Time & Hardware

This is all independent of move time. This author used a move time of 10 milliseconds (for Stockfish, no mention of how much time the LLMs got). … or at least they did if they accounted for the “Move Overhead” option defaulting to 10 milliseconds. If they left that at its default then 10ms - 10ms = 0ms so 🤷♀️.

There is also no information about the hardware or number of threads they ran this on, which I feel is important information.

Evaluation Function

After the game was over, I calculated the score after each turn in “centipawns” where a pawn is worth 100 points, and ±1500 indicates a win or loss.

Stockfish’s FAQ mentions that they have gone beyond centipawns for evaluating positions, because it’s strong enough that material advantage is much less relevant than it used to be. I assume it doesn’t really matter at level 1 with ~0 seconds to produce moves though.

Still, since the author has Stockfish handy anyway, it’d be interesting to use it in its non-handicapped form to evaluate who won.